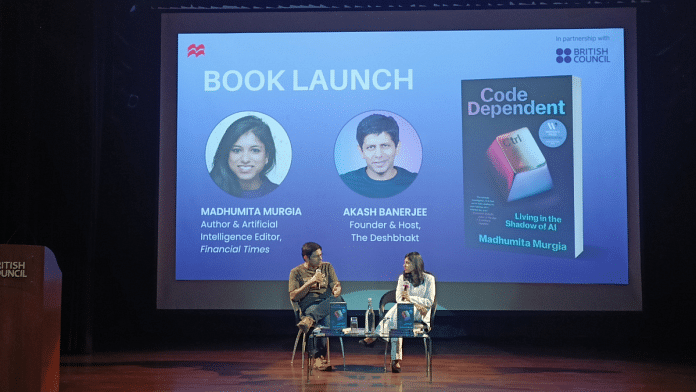

New Delhi: As the artificial intelligence editor at the Financial Times, Madhumita Murgia decided to explore her subject from the human perspective, which she calls the “sharp end of AI”. The result is an expansive book that covers everything from data mining and the digital sweatshops in Nairobi that train AI systems to the looming spectre of unregulated AI in public life.

“Artificial intelligence is neither artificial nor intelligent,” said Murgia during a recent discussion at the British Council in New Delhi.

In her book Code Dependent: Living in the Shadow of AI, she starts with an anecdote about an article she wrote in 2014 on data mining by websites. The rabbit hole she fell into while researching ‘cookies’ that mine and collect personal information was her first tryst with the data industry.

“I have seen AI grow from being a fringe technology to what it is today, and I’ve grown with it,” she said in conversation with YouTuber Akash Banerjee.

Her interest in the field took her to Nairobi, Kenya, where multinational companies hire cheap labour to help train their AI software. One of these workers was tasked with tagging the objects that driverless cars need to recognise in order to function: street signs, other vehicles, pedestrians and roads.

“There’s sort of a magical cape around the word ‘artificial intelligence’, but it is not as glamorous as we think it is,” explained Murgia. These “digital sweatshops” form the backbone of the data collection on which AI relies.

Murgia also said that AI largely works with statistics, not sentience.

“Generative AI like DALL-E, or large language models (LLMs) like ChatGPT, are trained on an obscene amount of data and learn to identify patterns,” she said. The qualities by which humans define intelligence, such as the ability to reason, make decisions and have emotional capacity, are entirely missing in artificial intelligence today.

AI is also not a “self-learning” technology, as many claim; instead, it needs to be taught using human labour, the editor added.

Who holds the power with AI?

Code Dependent explores the impact of AI rather than the technology behind it. Murgia opens every chapter of her book with a human anecdote to show how AI systems pervade our jobs, healthcare, education and public infrastructure, and how we are not yet ready for them.

In Amsterdam, the police used an AI application called ProKid to predict whether children were at risk of criminal behaviour based on their family situation, their experiences in school, the number of classes they missed and other personal data. Murgia found that this machine-learning application, built by academics together with the Dutch police, actually made children feel stigmatised and targeted, and led to them committing more crimes. There were also protests in the Netherlands over the racial prejudice that an application like ProKid could entrench.

“The problem with AI is that there’s no gap between when we’re developing it and when we’re rolling it out. We seem to be in a rush to remove humans from the loop, and that never works well,” said Murgia. Other technologies, such as the internet, were used by the United States military well before they were rolled out to the general public, which allowed time for regulations and safeguards to be developed.

Throughout the discussion, Murgia kept returning to Silicon Valley in California, where a small, homogeneous group of individuals not only leads the biggest software companies in the world but also makes decisions that affect everybody who uses AI. She pointed to the huge concentration of power in the AI industry and to how its research, financial and data requirements act as barriers that keep the technology in the hands of a select few. Every creator has their own set of values, and AI is imbued with the values of those few.

“I genuinely believe that AI can do a lot of good. But we need to keep humans in the loop, and we need to include all sections of society in the decision-making,” said Murgia.

What is the future of AI?

A Silicon Valley professional whom Murgia interviewed for her book told her it would be an amazing future in which AI could make up and tell bedtime stories to our children.

“To me, frankly, that would be a horrible future and I hope I’m not in it when it happens,” she said to the audience.

Murgia remained steadfast in her assertion that AI can be used for human welfare, but only if power is decentralised and people from all sections of society remain active decision-makers.

(Edited by Humra Laeeq)