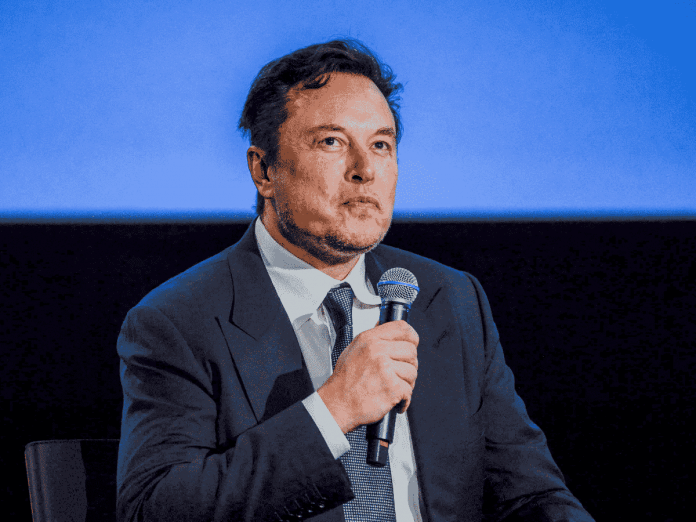

New Delhi: A group of industry executives and stakeholders, including American entrepreneurs Elon Musk and Steve Wozniak and author Yuval Noah Harari, have called for a six-month pause in developing systems more powerful than OpenAI’s newly launched GPT-4, citing possible “profound risks to society and humanity”.

Microsoft-backed OpenAI recently released a new version of its popular artificial intelligence chatbot, dubbed GPT-4, which it said is more creative and collaborative than ever before and surpasses ChatGPT in its advanced reasoning capabilities.

Musk was one of the co-founders of OpenAI.

“It can generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user’s writing style,” OpenAI had said.

“Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable,” said the open letter by the Future of Life Institute (FLI), which has listed Twitter Chief Executive Officer Musk as an external advisor, among several other prominent personalities.

Over 1,300 people have signed the open letter.

According to its website, the FLI is an independent non-profit organisation funded by a range of individuals and organisations “who share our desire to reduce global catastrophic and existential risk from powerful technologies”.

Musk donated $10 million to FLI in 2015. According to Reuters, the non-profit is also funded by the London-based group Founders Pledge and the Silicon Valley Community Foundation.

‘Immediate pause on AI development’

The letter states that AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs.

“As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources,” it said. “Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict, or reliably control.”

The letter quoted a recent statement by OpenAI saying that “at some point, it may be important to get (an) independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models”.

“We agree. That point is now,” the letter said, adding: “Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.”

The letter added that AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt.

“This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities,” it said.

‘AI summer’

The letter noted that contemporary AI systems are now becoming human-competitive at general tasks, and questioned whether we should “let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilisation?”

Such decisions, the letter said, must not be delegated to unelected tech leaders.

AI research and development should be refocused on making today’s powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal, the letter said, adding that in parallel, AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems.

“Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an ‘AI summer’ in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society. We can do so here. Let’s enjoy a long AI summer, not rush unprepared into a fall,” it concluded.

Musk recently said that “AI stresses me out,” even as his own company Tesla plans to leverage artificial intelligence.

On Thursday, Musk tweeted an AI joke that possibly reveals his thoughts and fears about AI in its current form. It read: “Old joke about agnostic technologists building artificial super intelligence to find out if there’s a God. They finally finish & ask the question. AI replies: ‘There is now, mfs!!(sic)’”

(Edited by Uttara Ramaswamy)
