New Delhi: American scientist John Joseph Hopfield and British-Canadian computer scientist Geoffrey Hinton have been awarded the 2024 Nobel Prize in Physics. The two used tools from physics to develop methods that helped lay the foundation for machine learning with artificial neural networks, showing how physics can be used to find patterns in information and to sort and analyse huge amounts of data.

Hopfield created a network that can store patterns in data and then reconstruct them. Hinton, popularly known as the Godfather of Artificial Intelligence, invented a machine that can find characteristic features in data and create new patterns. Because it can generate new examples, it was an early example of a generative model.

ThePrint explains what machine learning and artificial neural networks are, and how Hopfield and Hinton used the concepts of fundamental physics to pave the way for the development of tools that are invaluable in this age.

How machine learning works using artificial neural networks

Machine learning, which has exploded over the past 15-20 years, is a subfield of artificial intelligence (AI). It uses data and algorithms to enable a machine to imitate intelligent human behaviour and functions of the brain, such as memory and learning.

Unlike traditional software, which works only according to the instructions it is given, machine learning allows a computer to learn by example, and the work of Hopfield and Hinton helped make this possible. Such systems can perform complex tasks in ways that resemble how humans solve problems.

Machine learning rests on statistical learning and optimisation methods that allow computers to analyse datasets and identify patterns. Deep learning, a branch of machine learning, is built on artificial neural networks: networks of interconnected nodes, loosely modelled on the way biological neurons work, that help the machine identify relationships in vast datasets.

In 1957, American psychologist Frank Rosenblatt designed the perceptron, one of the earliest artificial neural networks.

The inspiration to develop artificial neural networks came from the desire to understand how the brain’s network of neurons and synapses works.

Scientists created artificial neural networks in the form of computer simulations to recreate the brain’s functions. Nodes stand in for neurons. Synapses, the specialised connections through which nerve cells communicate with each other, are represented by connections between the nodes, each given a numerical value, or weight, that sets how strong it is.
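Rosenblatt’s perceptron is a simple case of nodes joined by weighted connections, and it learns from examples rather than instructions. The sketch below is a minimal illustration, not Rosenblatt’s original design: a node adds up its inputs, each multiplied by a connection weight, and fires (outputs 1) if the total is positive; after every wrong answer, the weights are nudged towards the right one. The function names, learning rate and AND-gate training data are hypothetical choices for illustration.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, lr=1.0):
    """Perceptron learning rule: after each mistake, shift every weight by
    lr * error * input, where error = target - prediction."""
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error:
                mistakes += 1
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:  # every example is now classified correctly
            break
    return weights, bias


# Hypothetical training examples: the logical AND of two binary inputs.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])  # → [0, 0, 0, 1]
```

No rule for AND is ever written into the program; the node reaches the right weights purely by correcting its mistakes on the examples.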

Canadian psychologist Donald Hebb had hypothesised that learning happens because connections between neurons are reinforced when the neurons are active together. This hypothesis forms the basis of ‘training’ artificial networks.
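Hebb’s hypothesis is often summarised as “neurons that fire together, wire together”. Below is a minimal sketch of one common mathematical reading of it, assuming activity is recorded as 1 (active) or 0 (silent) and using a hypothetical learning rate of 0.1: each time two nodes are active at the same moment, the weight of the connection between them grows.

```python
def hebbian_update(weights, activity, rate=0.1):
    """Strengthen the connection between every pair of nodes that are active
    at the same time: w[i][j] += rate * x[i] * x[j] (no self-connections)."""
    n = len(activity)
    for i in range(n):
        for j in range(n):
            if i != j:
                weights[i][j] += rate * activity[i] * activity[j]
    return weights


n = 3
weights = [[0.0] * n for _ in range(n)]
# Hypothetical experience: nodes 0 and 1 are repeatedly active together,
# while node 2 stays silent.
for _ in range(5):
    hebbian_update(weights, [1, 1, 0])
print(round(weights[0][1], 2), weights[0][2])  # → 0.5 0.0
```

Only the connection between the nodes that were active together has been strengthened; connections to the silent node are unchanged.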

However, interest in neural networks waned in the late 1960s amid doubts that these technologies would ever be of real use. Then, in the 1980s, research by several scientists, especially this year’s physics laureates, reignited the world’s fascination with neural networks.

The Hopfield network

In 1982, Hopfield developed what came to be known as the Hopfield network, a type of associative memory: a network that stores patterns and, when given a new pattern, retrieves the stored one it most resembles.

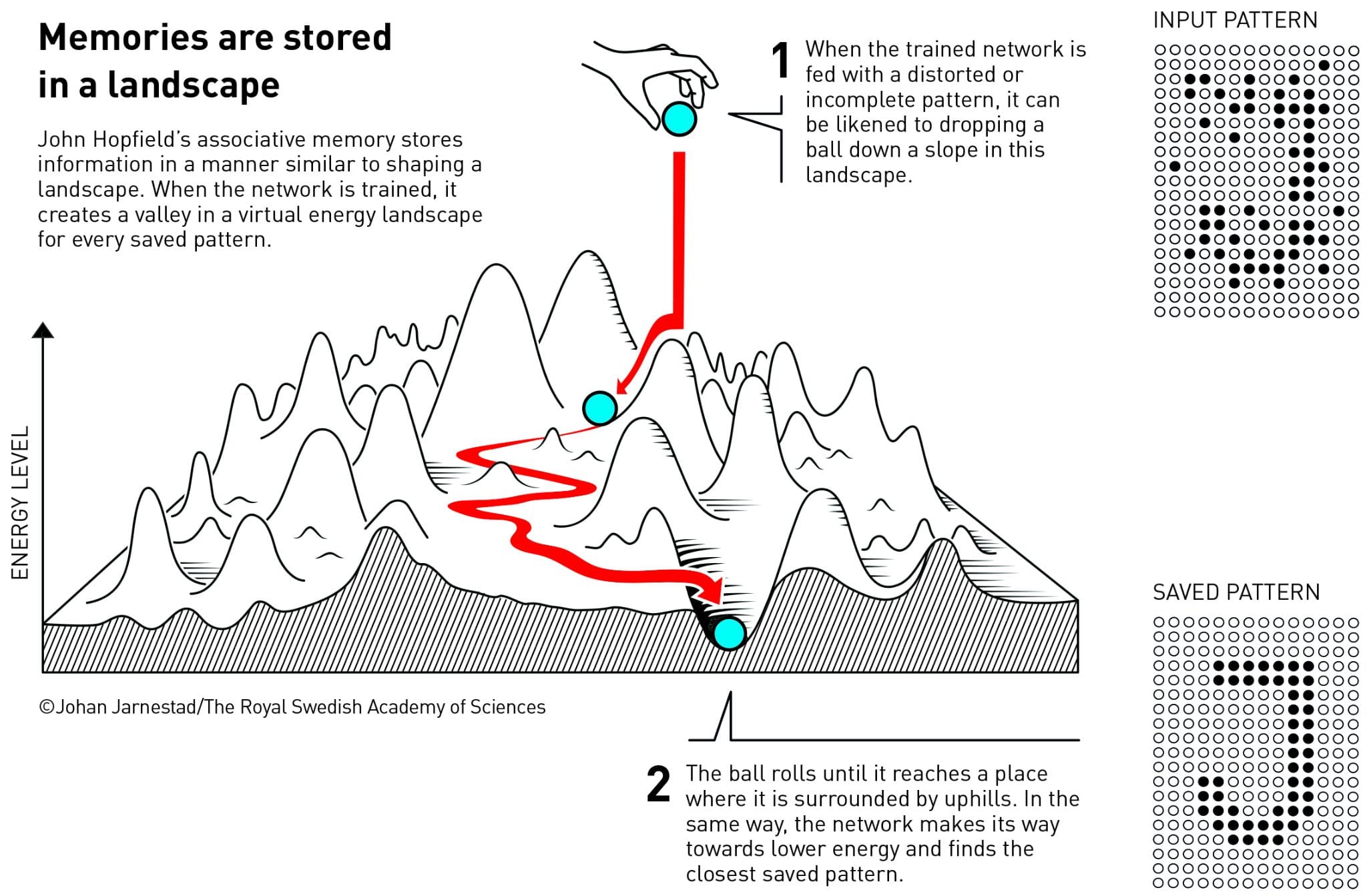

A Hopfield network works with two patterns: the saved pattern, which it has stored, and the input pattern, which it is fed.

One advantage of the network is that it can recreate data that has been distorted, partially erased or corrupted by noise. Because it stores patterns, it can reconstruct the original even when it is fed an incomplete or distorted version of a pattern it has stored.

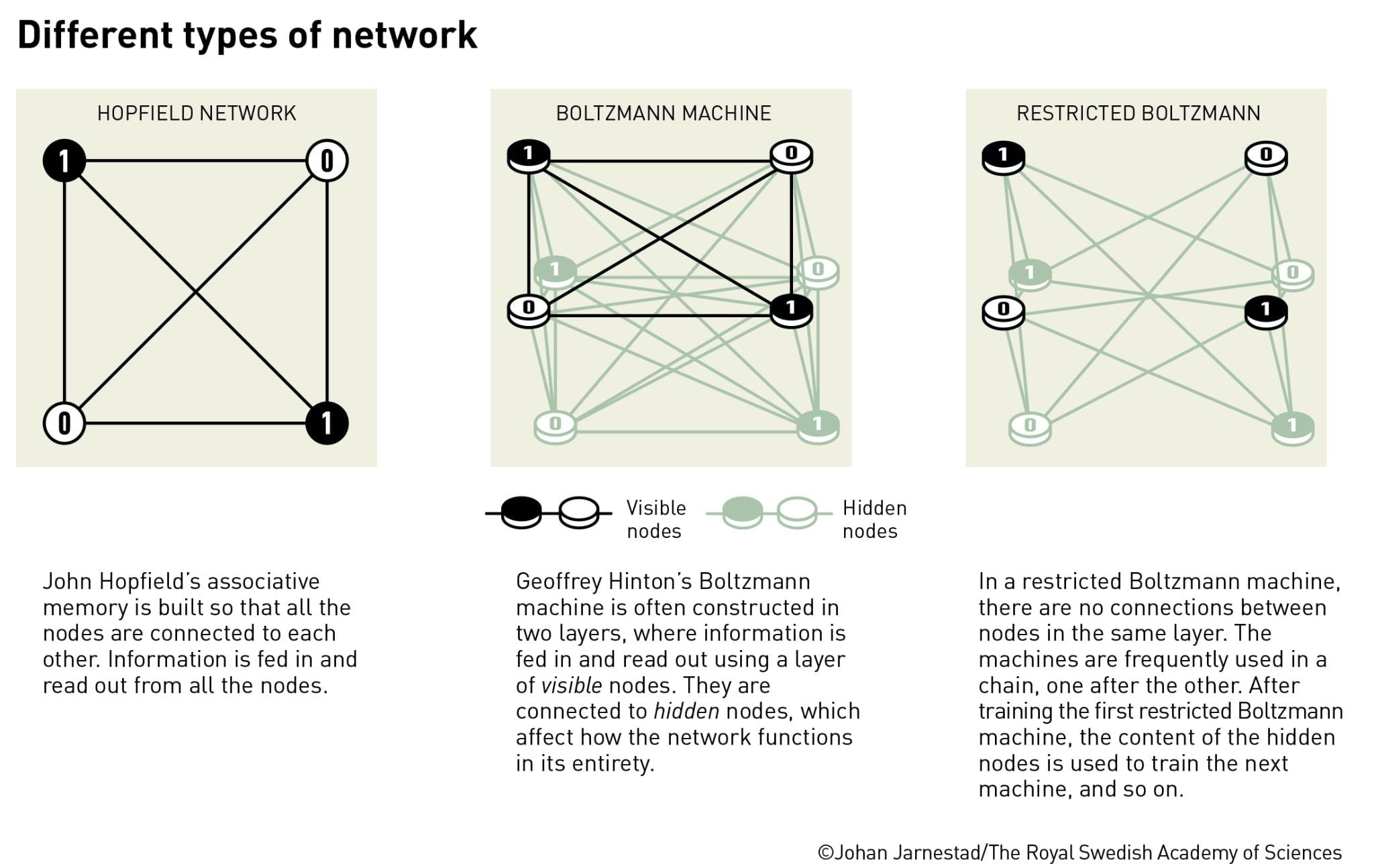

How does this work? In a Hopfield network, nodes are joined together through connections of different strengths.

Each node’s value can be 0 or 1, similar to pixels in a black-and-white picture. A formula that uses the values of all the nodes and the strengths of the connections between them gives the energy of the network. This energy is analogous to the energy of a spin system in physics, in which the spin of each atom makes it behave like a tiny magnet.

Black is represented by 0 and white by 1. When an image is saved, the connection strengths are set so that the saved image has low energy.
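The article does not give the energy formula. One way to make it concrete is the form used in Hopfield’s 1982 paper, as we understand it, with thresholds set to zero: for node values V (0 or 1), the connection strengths for a set of saved images are T[i][j] = sum over the images of (2V_i - 1)(2V_j - 1), with no self-connections, and the energy is E = -1/2 sum of T[i][j] V_i V_j. The sketch below uses a hypothetical 8-pixel image to show that the saved image has lower energy than a distorted copy.

```python
def store(patterns):
    """Build symmetric connection strengths from 0/1 patterns:
    T[i][j] = sum over patterns of (2*V_i - 1) * (2*V_j - 1), T[i][i] = 0."""
    n = len(patterns[0])
    T = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    T[i][j] += (2 * p[i] - 1) * (2 * p[j] - 1)
    return T


def energy(T, state):
    """Network energy E = -1/2 * sum over i, j of T[i][j] * V_i * V_j."""
    n = len(state)
    return -0.5 * sum(T[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


# Hypothetical 8-pixel image (0 = black, 1 = white).
saved = [1, 1, 1, 1, 0, 0, 0, 0]
T = store([saved])
distorted = [1, 0, 1, 1, 0, 0, 0, 0]  # one white pixel turned black
print(energy(T, saved), energy(T, distorted))  # → -6.0 -3.0
```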

When a distorted or incomplete pattern is fed to the Hopfield network, it experiments by changing the values of nodes, one by one, so that the system’s overall energy falls.

For instance, it may experiment by changing a black node in the new image (input pattern) to white. If this change reduces the overall energy of the system, the network actually changes that node from black to white.

The network repeats this for every node until no single change can lower the energy any further. A node’s colour is left unchanged if changing it would not reduce the overall energy.

Once the network has changed the colours of only those nodes that needed changing, the old image (the saved pattern) is often reproduced: starting from a new, imperfect image, the system has recalled the image it stored. In this sense, it has “remembered” the image.
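The recall procedure (try changing each node, keep the change only if the energy falls, repeat until nothing changes) can be sketched as follows. It assumes the storage rule and energy formula from Hopfield’s 1982 paper with thresholds set to zero; the two 8-pixel images are hypothetical. Trying a flip and comparing energies is written out literally for readability; practical implementations usually compute the energy change directly from each node’s weighted input.

```python
def store(patterns):
    """T[i][j] = sum over patterns of (2*V_i - 1) * (2*V_j - 1), T[i][i] = 0."""
    n = len(patterns[0])
    T = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    T[i][j] += (2 * p[i] - 1) * (2 * p[j] - 1)
    return T


def energy(T, state):
    """E = -1/2 * sum over i, j of T[i][j] * V_i * V_j."""
    n = len(state)
    return -0.5 * sum(T[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(T, state):
    """Visit the nodes one by one and keep a flip only if it lowers the
    energy; stop when a full sweep over all nodes changes nothing."""
    state = list(state)
    changed = True
    while changed:
        changed = False
        for i in range(len(state)):
            trial = list(state)
            trial[i] = 1 - trial[i]  # black <-> white
            if energy(T, trial) < energy(T, state):
                state = trial
                changed = True
    return state


# Two hypothetical saved images (0 = black, 1 = white).
image_a = [1, 1, 1, 1, 0, 0, 0, 0]
image_b = [1, 0, 1, 0, 1, 0, 1, 0]
T = store([image_a, image_b])

print(recall(T, [1, 0, 1, 1, 0, 0, 0, 0]))  # → [1, 1, 1, 1, 0, 0, 0, 0]
print(recall(T, [1, 0, 1, 0, 1, 0, 0, 0]))  # → [1, 0, 1, 0, 1, 0, 1, 0]
```

Each distorted input differs from one saved image by a single pixel, and the network settles on that image rather than the other one.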

One can compare the Hopfield network to a landscape of peaks and valleys. Just as a ball dropped on a mountainside rolls down into the nearest valley and stops there, the Hopfield network keeps moving towards lower energy until it settles on the saved pattern closest to its input.

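It moves towards lower energy because, when an image is saved, the connection strengths are set so that the saved image sits at the bottom of one of these valleys. When the energy can fall no further, the network has settled in a valley, usually the one belonging to the saved image that most resembles the input.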
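Why the network can only move downhill can be checked with a short derivation. It assumes the energy formula from Hopfield’s 1982 paper, which the article does not spell out: $V_i$ is the value (0 or 1) of node $i$ and $T_{ij}$ is the strength of the connection between nodes $i$ and $j$, with $T_{ij} = T_{ji}$, $T_{ii} = 0$ and all thresholds set to zero.

$$E = -\frac{1}{2}\sum_{i}\sum_{j \neq i} T_{ij} V_i V_j$$

Changing a single node $k$ by $\Delta V_k$ (either $+1$ or $-1$) affects only the terms containing $V_k$, so, using the symmetry $T_{kj} = T_{jk}$,

$$\Delta E = -\Delta V_k \sum_{j \neq k} T_{kj} V_j$$

A change is kept only when $\Delta E < 0$, so every accepted change lowers the energy. A network of $N$ nodes has only $2^N$ possible states, so the process must stop, at a state where no single change lowers the energy: the bottom of a valley.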


Hinton’s Boltzmann machine

After Hopfield’s work on associative memory was published, Hinton studied the Hopfield network in depth and set out to build something new. He drew on ideas from statistical physics, which describes systems made up of many similar elements, such as molecules in a gas.

Statistical physics cannot track every element of such a system, but it can calculate how likely the system is to be in each of its possible states. An equation by Ludwig Boltzmann, now known as the Boltzmann distribution, expresses this: the lower a state’s energy, the more probable it is. This equation formed the basis of Hinton’s Boltzmann machine, which can be trained to recognise characteristic elements in a given type of data.
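In a standard form, Boltzmann’s formula gives the probability of a state s as P(s) = exp(-E(s)/T) / Z, where E(s) is the state’s energy, T is a temperature parameter (assumed to be 1 here) and Z sums exp(-E/T) over all states so that the probabilities add up to 1. For a Boltzmann machine, a common choice of energy is E(s) = -sum over i<j of W[i][j] s_i s_j - sum of b[i] s_i, with connection weights W and biases b. The sketch lists every state of a hypothetical three-node network and shows that lower-energy states are more probable.

```python
import itertools
import math


def energy(W, b, s):
    """E(s) = -sum_{i<j} W[i][j] s_i s_j - sum_i b[i] s_i for binary s."""
    n = len(s)
    pair = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))


def boltzmann_probabilities(W, b, temperature=1.0):
    """P(s) = exp(-E(s)/T) / Z over every binary state s of the network."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    weights = [math.exp(-energy(W, b, s) / temperature) for s in states]
    Z = sum(weights)  # partition function: makes the probabilities sum to 1
    return {s: w / Z for s, w in zip(states, weights)}


# Hypothetical 3-node network: nodes 0 and 1 are strongly coupled.
W = [[0, 2, 0], [2, 0, 0], [0, 0, 0]]
b = [-0.5, -0.5, 0]
probs = boltzmann_probabilities(W, b)
for state, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(state, round(energy(W, b, state), 2), round(p, 3))
```

The states in which nodes 0 and 1 are both on have the lowest energy and therefore the highest probability.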

This machine uses two types of nodes: visible and hidden. Visible nodes are fed with information, while hidden nodes form a hidden layer; their values also contribute to the energy of the network.

The machine runs by updating the values of its nodes one at a time, with an element of chance in each update. Eventually it reaches a state in which the pattern of the nodes keeps changing, but the properties of the network as a whole remain the same.

In this state, Boltzmann’s equation gives the probability of each possible pattern, and that probability is set by the network’s energy.

When the machine is stopped, the pattern it has reached is a new pattern. Because it can create new patterns in this way, the Boltzmann machine is an early example of a generative model.
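The one-node-at-a-time updating is usually implemented as Gibbs sampling: each node is switched on with probability sigmoid(net input), where the net input is the node’s bias plus the weighted sum of the other nodes’ values (temperature assumed to be 1). The sketch below runs a tiny machine with visible nodes only, using hypothetical symmetric weights, and checks that the fraction of time it spends in each pattern approaches the probability given by Boltzmann’s formula. Whatever pattern the machine holds when it is stopped is a sample from that distribution, which is what makes it generative.

```python
import itertools
import math
import random


def energy(W, b, s):
    """E(s) = -sum_{i<j} W[i][j] s_i s_j - sum_i b[i] s_i."""
    n = len(s)
    pair = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))


def exact_probabilities(W, b):
    """Boltzmann probabilities (temperature 1) obtained by listing every state."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    weights = [math.exp(-energy(W, b, s)) for s in states]
    Z = sum(weights)
    return {s: w / Z for s, w in zip(states, weights)}


def run_machine(W, b, steps, rng, burn_in=1000):
    """Update one node at a time, switching it on with probability
    sigmoid(net input). Returns the fraction of steps spent in each state."""
    n = len(b)
    s = [rng.randint(0, 1) for _ in range(n)]
    counts = {}
    for step in range(burn_in + steps):
        i = step % n  # visit the nodes in turn
        net_input = b[i] + sum(W[i][j] * s[j] for j in range(n) if j != i)
        p_on = 1.0 / (1.0 + math.exp(-net_input))
        s[i] = 1 if rng.random() < p_on else 0
        if step >= burn_in:  # ignore the start, before the machine settles
            counts[tuple(s)] = counts.get(tuple(s), 0) + 1
    return {state: c / steps for state, c in counts.items()}


# Hypothetical symmetric weights and biases for a 3-node machine.
W = [[0, 2, 0], [2, 0, 0], [0, 0, 0]]
b = [-0.5, -0.5, 0]
empirical = run_machine(W, b, steps=200_000, rng=random.Random(0))
exact = exact_probabilities(W, b)
for state in sorted(exact):
    print(state, round(exact[state], 3), round(empirical.get(state, 0.0), 3))
```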

The Boltzmann machine learns not from instructions but from the examples it is fed. During training, its connection values are updated so that the example patterns become more probable: when the same pattern is fed to the machine several times, the probability of that pattern occurring increases. A trained machine fed with new patterns can then recognise familiar traits in that information.
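This training corresponds to the classic Boltzmann machine learning rule: each connection weight moves by a small step times the difference between how often its two nodes are on together in the training examples and how often they are on together when the machine runs freely. The sketch below assumes a tiny machine with visible nodes only, so the free-running averages can be computed exactly by listing all states; larger machines, and those with hidden nodes, need sampling instead. The training pattern, learning rate and number of steps are hypothetical.

```python
import itertools
import math


def energy(W, b, s):
    """E(s) = -sum_{i<j} W[i][j] s_i s_j - sum_i b[i] s_i."""
    n = len(s)
    pair = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))


def distribution(W, b):
    """Exact Boltzmann probabilities (temperature 1) of every state."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    weights = [math.exp(-energy(W, b, s)) for s in states]
    Z = sum(weights)
    return {s: w / Z for s, w in zip(states, weights)}


def train_step(W, b, data, lr=0.1):
    """One step of the learning rule for a fully visible machine:
    change = lr * (average in the data - average when running freely)."""
    n = len(b)
    model = distribution(W, b)  # free-running probabilities, computed once
    for i in range(n):
        data_i = sum(s[i] for s in data) / len(data)
        model_i = sum(p * s[i] for s, p in model.items())
        b[i] += lr * (data_i - model_i)
        for j in range(i + 1, n):
            data_ij = sum(s[i] * s[j] for s in data) / len(data)
            model_ij = sum(p * s[i] * s[j] for s, p in model.items())
            W[i][j] += lr * (data_ij - model_ij)
            W[j][i] = W[i][j]  # keep connections symmetric


n = 3
W = [[0.0] * n for _ in range(n)]
b = [0.0] * n
data = [(1, 1, 0)] * 4  # hypothetical: the same pattern fed repeatedly
before = distribution(W, b)[(1, 1, 0)]
for _ in range(200):
    train_step(W, b, data)
after = distribution(W, b)[(1, 1, 0)]
print(round(before, 3), round(after, 3))
```

Before training, all eight patterns are equally likely (1/8 each); after training, the repeatedly fed pattern has become far more probable than the rest.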

The Boltzmann machine can also produce new patterns that resemble the examples on which it was trained.

In 2006, Hinton and his colleagues developed a method for pre-training a network with a series of Boltzmann machines arranged in layers. The method uses restricted Boltzmann machines, in which there are no connections between nodes in the same layer, chained one after the other.

Once the first restricted Boltzmann machine has been trained, the content of its hidden nodes is used to train the next machine, and so on along the chain.
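The layer-by-layer scheme can be sketched as follows. Each restricted Boltzmann machine here is trained with one-step contrastive divergence (CD-1), the training method Hinton proposed for restricted machines. The toy data, layer sizes, learning rate and number of epochs are hypothetical choices, and using probabilities instead of binary samples for the reconstruction is a common simplification. Once a machine is trained, the probabilities of its hidden nodes become the training data for the next machine in the chain.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class RBM:
    """Restricted Boltzmann machine: every visible node connects to every
    hidden node, with no connections inside a layer."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b_h))]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b_v))]

    def train(self, data, epochs=300, lr=0.1):
        """CD-1: compare node statistics on the data with those on a
        one-step reconstruction, and move the weights accordingly."""
        for _ in range(epochs):
            for v0 in data:
                h0 = self.hidden_probs(v0)
                h_sample = [1 if self.rng.random() < p else 0 for p in h0]
                v1 = self.visible_probs(h_sample)  # reconstruction
                h1 = self.hidden_probs(v1)
                for i in range(len(v0)):
                    self.b_v[i] += lr * (v0[i] - v1[i])
                    for j in range(len(h0)):
                        self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
                for j in range(len(h0)):
                    self.b_h[j] += lr * (h0[j] - h1[j])


def pretrain_stack(data, layer_sizes, rng):
    """Greedy layer-wise pre-training: train one RBM, then use its hidden
    probabilities as the training data for the next RBM."""
    stack, layer_input = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(len(layer_input[0]), n_hidden, rng)
        rbm.train(layer_input)
        stack.append(rbm)
        layer_input = [rbm.hidden_probs(v) for v in layer_input]
    return stack


# Hypothetical toy data: two 6-pixel patterns, each shown twice.
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 2
stack = pretrain_stack(data, layer_sizes=[4, 2], rng=random.Random(0))

codes = data
for rbm in stack:
    codes = [rbm.hidden_probs(v) for v in codes]  # pass data up the chain
print([[round(p, 2) for p in code] for code in codes])
```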

Today, the Boltzmann machine can be used, for example, to recommend films and television series based on a viewer’s preferences.

Machine learning: Applications for today & the future

Financial services, healthcare companies, transportation firms, pharmaceutical companies and even governments are using machine learning.

Google Translate was trained to “learn” multiple languages through machine learning.

Common applications of machine learning include speech recognition; computer vision, which enables computers to extract useful information from digital images or videos; recommendation engines, which online retailers use to suggest relevant products to customers, for instance during checkout; and online customer service, where chatbots are replacing human agents.

Machine learning algorithms are used not only by governments and businesses but also in scientific research. Using deep learning algorithms, scientists can detect subtle patterns in an organism’s genetic material and use the findings to develop medical treatments.

In the future, machine learning may help identify diseases more effectively and find treatments for illnesses.

However alluring machine learning and artificial neural networks may seem, it is important to be mindful of the risks associated with the technology, such as data security breaches and the misuse of personal information by cybercriminals.

(Edited by Mannat Chugh)
