Bengaluru: Artificial Intelligence is ubiquitous. But when it comes to curbing the tide of extreme speech on social media, it is—for the most part—a silent spectator. With this declaration, professor of media anthropology Sahana Udupa launched into her lecture on how AI technologies are only now being trained to moderate social media content.

She demonstrated how AI systems worldwide fail to recognise derogatory phrases and words deeply rooted in a country’s culture and history. This research formed the basis of her 2023 PhD thesis titled ‘Extreme Speech and the (In)significance of Artificial Intelligence’.

While extreme speech is highly context-dependent, her findings revealed that the datasets powering AI algorithms are not.

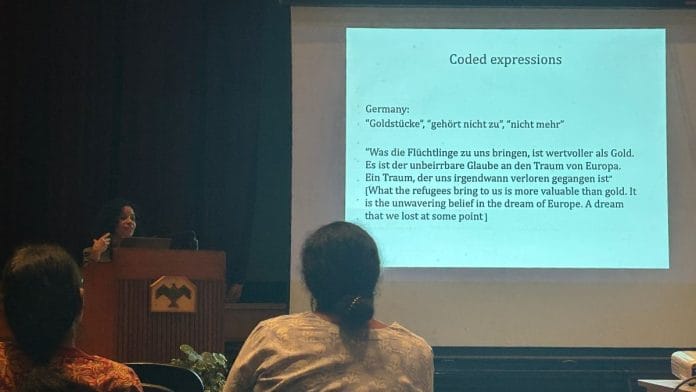

“Especially in India, expressions in English, Hinglish, and Hindi that lack common hateful words but carry implicit meanings—culturally coded phrases, mythological references, indirect dog whistles, and in-group idioms used to target Muslim minorities and Dalits—often go undetected by AI models,” said Udupa during the lecture at her alma mater, National Institute of Advanced Studies (NIAS) Bengaluru earlier this month.

The lecture also struck a cautionary note. “We have seen studies that proved that such disinformation and hate speech have contributed to reduced trust in political institutions, rise of populist parties and also incidents of physical violence,” Udupa said.


Spotting derogatory speech

Udupa warned that extreme speech has only increased in recent years.

“Even though AI has almost proved to be insignificant in addressing extreme speech, we see increasing reliance on such technologies by corporations and governments as a solution,” she said.

The most recent example she cited was Meta’s decision in January 2025 to end its partnerships with professional fact-checking organisations. The company is switching instead to a system of AI-driven community notes, similar to those used on X.

“Our studies show that such models cannot flag extreme speech in languages other than English or those that have local culturally-coded text,” Udupa said.

Her team ran derogatory hashtags and phrases from Indian social media through Perspective API—a machine-learning model used by platforms like Reddit to rate text toxicity.

“Words and phrases like #BioWeapon, #BioJihad and #TableeghiJamaat, which were used online during the Covid-19 pandemic to spread misinformation, escaped the AI model,” Udupa said.

Other racist expressions and coded language have also been used to target marginalised communities, including Dalits and Muslims, online. These forms of disguised hate speech, Udupa argued, have largely evaded detection by automated moderation systems.

In 2019, Facebook’s AI systems did not pick up an upsurge in rhetoric against religious and ethnic minorities during protests around the National Register of Citizens (NRC) in Assam. She and her team found that derogatory messages aimed at Bengali Muslims were viewed at least 5.4 million times on Facebook.

Yet the platform failed to flag them because the company did not have an algorithm to detect hate speech in Assamese, Udupa said.


What’s required?

There’s a fine line between monitoring hate speech and suppressing freedom of expression.

Many members of the audience advocated for a regulatory framework to oversee extreme speech on social media. One man cited Samay Raina’s show India’s Got Latent, which recently landed in controversy.

“We need AI to trace such content on social media that is misogynistic and sexist and take it down immediately,” the outraged audience member said.

While the professor acknowledged the fine line between jokes and extreme speech, she cautioned that such mechanisms should not become tools for the state to suppress freedom of speech and dissent.

“The intersections between big powerful tech platforms and the state need to be handled carefully. Currently, we don’t have a theoretical framework to deal with the rising collaboration between the two,” Udupa said.

Udupa called for collaboration between social media platforms and independent fact-checkers from different geographies, rather than mechanically feeding machines data stripped of context.

“We cannot solely rely on AI to solve society’s problems for us,” the professor warned. “Technology must assist—not replace—human judgement in content moderation.”

(Edited by Theres Sudeep)
