Neurological conditions are the leading global cause of disability, affecting more than three billion people worldwide. Combined with mental health conditions and substance use disorders, they could cost the global economy up to $16 trillion by 2030. Against this backdrop, neurotechnology holds extraordinary promise. It ranges from clinical applications – such as brain and spinal implants that restore mobility or early-detection systems for Alzheimer’s disease – to consumer devices that support a healthy lifestyle and cognitive performance.

At the same time, the very innovations that hold transformative potential also pose risks to privacy, identity and autonomy, because they can access, and potentially influence, the most personal layer of human existence: our minds.

What do we need to protect?

Concerns about privacy, and mental privacy in particular, have grown significantly with the rise of neurotechnologies, given their direct access to neural data and the assumption that meaningful information about an individual’s mental state can be derived from that data. In reality, however, equally sensitive inferences about mental state or health status can also be drawn from other forms of biometric and physiological data, albeit with varying degrees of accuracy and specificity. For example:

- Heart-rate variability can indicate stress and emotional states.

- Eye-tracking reveals attention and cognitive load and can be correlated with personality traits.

- Electromyography (EMG) sensors can detect subtle gestures or the intention to make simple movements.

Major technology companies are already combining multiple sensors into powerful platforms. Meta’s AI glasses with a neural band use EMG; Apple’s Vision Pro integrates eye-tracking with biometric sensors; and Apple has patented electroencephalography (EEG)-enabled AirPods. Powered by AI, these technologies increasingly blur the line between neural and non-neural data while mapping our mental and health states.

This raises a fundamental question for policymakers: What exactly do we need to protect, rather than what do we want to regulate?

The regulatory challenge is twofold: to safeguard human dignity through protections for privacy, identity and autonomy, while enabling innovation that can improve lives. History shows this balance is difficult but essential. Too narrow a focus (for example, regulating only neural data) risks stifling innovation while leaving gaps in protection. Too permissive an approach exposes citizens to the risk of manipulation, surveillance and erosion of privacy.

In this light, the ultimate task for regulators is clear: to protect individuals and communities from inferences about their mental and health states, regardless of the data source, that could undermine their rights, dignity or opportunities, while preserving the incentives needed for safe and ethical innovation.


Policy approaches across regions

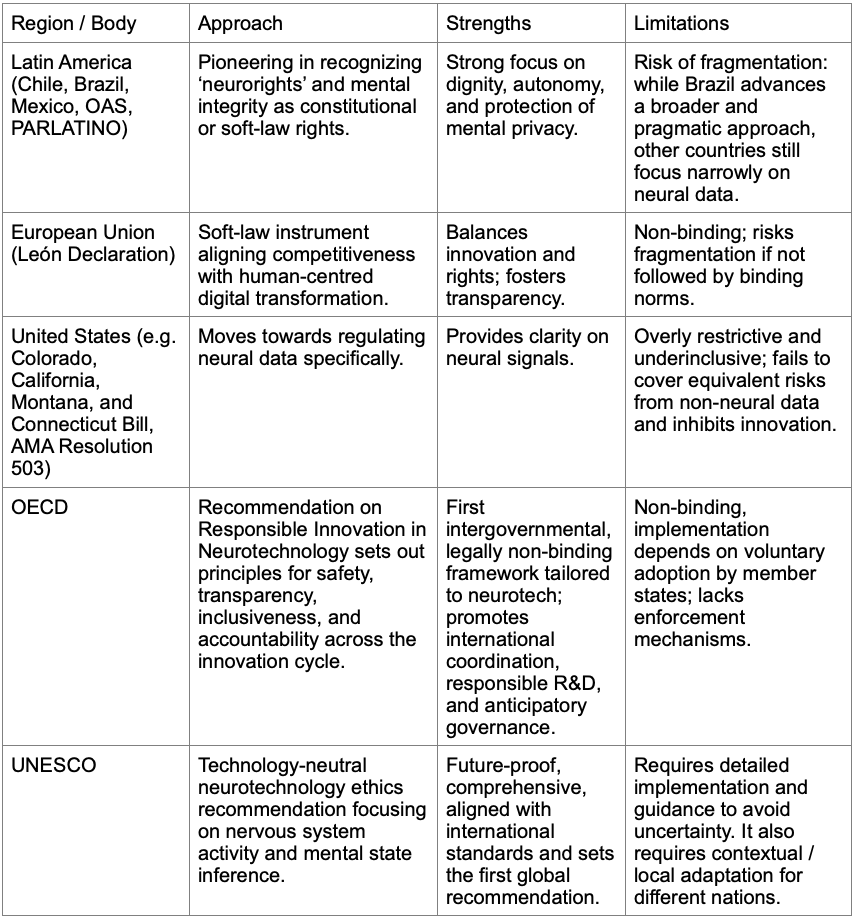

Different regions and institutions are experimenting with distinct approaches to neurotechnology regulation. Comparing them helps us identify strengths and pitfalls; the figure above summarizes key examples.

Given how quickly the technology and science are evolving, the regulatory debate needs to focus on protecting the integrity of the human mind and privacy overall, rather than on protecting a specific data category.

Legal scholars also warn that building privacy protections on categories of ‘sensitive data’ is unsustainable. These categories are often arbitrary and fail to capture the reality of an inference-driven economy. Virtually any type of data, however innocuous, can be combined or analyzed to reveal sensitive attributes, such as health conditions, beliefs or political opinions. As technology and data-analysis capacity evolve, so will the information that can eventually be decoded. Regulations that protect only certain data types risk being under-inclusive, guarding against some risks while ignoring others.

Towards a technology-agnostic framework

Given the ultimate regulatory goal, a technology-agnostic approach stands out as the most effective way to provide comprehensive, future-proof protection, prevent regulatory arbitrage and avoid distortions to innovation.

To strike the right balance, policy should be principled yet pragmatic. It should:

1. Adopt technology-agnostic rules

Regulation should protect against harmful inferences, regardless of the data source. This avoids the trap of regulating based on technical categories and ensures relevance for the evolving technological landscape.

2. Ensure global alignment

Countries should avoid fragmented approaches. Latin America’s constitutional amendments, the EU’s digital agenda and UNESCO’s global recommendations can serve as complementary building blocks towards harmonization.

3. Complement with pragmatic tools

Beyond formal law, additional mechanisms can reinforce responsible practices. For example, the NeuroTrust Index (in development by the Global Future Council on Neurotechnology and Duke University’s Cognitive Futures Lab) offers a transparent framework to measure and promote responsible innovation while fostering public trust.

4. Balance protection with innovation

Policymakers must avoid artificial barriers that discourage research and investment. By focusing on harms and rights, a technology-agnostic framework ensures that people benefit from neurotechnology’s potential, without compromising dignity or freedom.

5. Provide implementation guidance

A technology-agnostic approach requires clear implementation guidance to avoid regulatory uncertainty. Frameworks should provide specific criteria for determining when data processing amounts to inferring mental or other health states, and should establish protections proportional to the sensitivity of the inference and the context of use. This clarity ensures companies can innovate thoughtfully while maintaining robust privacy protections.

As neurotechnology stands poised to address neurological conditions affecting more than three billion people worldwide, privacy frameworks must enable life-saving and life-improving innovation. A technology-agnostic focus on mental and health state inferences safeguards both privacy and progress, ensuring breakthrough solutions reach end users while protecting against emerging privacy threats.

This article represents the consensus view of the Global Future Council on Neurotechnology.