The National Institutional Ranking Framework (NIRF) evaluations form the basis for many important decisions in Indian higher education, from admissions to recruitment. However, several of its key parameters are poorly designed, which leads to absurd ratings. Since most colleges aim to perform well in these rankings, it is essential that NIRF's parameters are reviewed and defined more carefully.

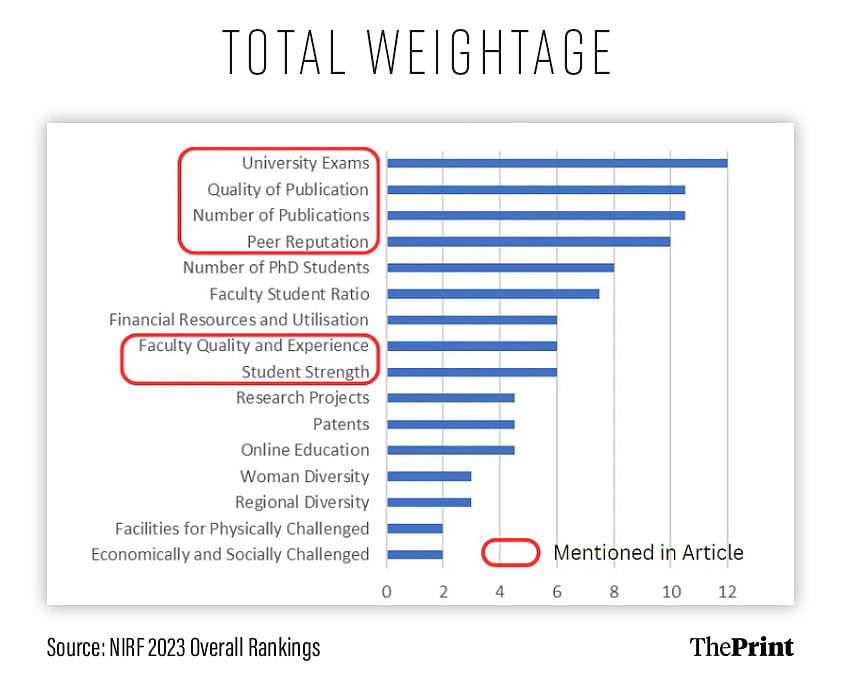

The 2023 NIRF overall rankings used 16 parameters to evaluate universities. Fig. 1 shows the importance (weight) allocated to each parameter. In principle, these parameters cover a good spread of criteria. In practice, even the most important of them are poorly designed.

Let us look at some of these parameters in detail.

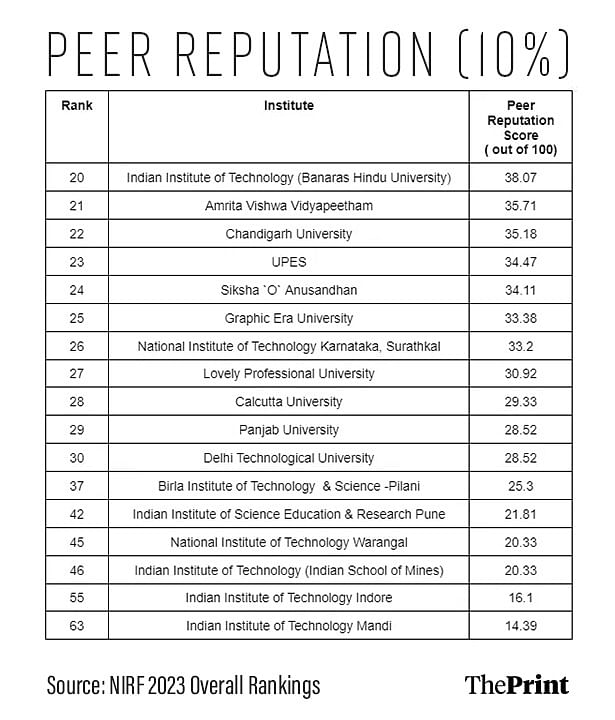

Peer reputation

This is one of the most opaque parameters in NIRF. It is assessed through a 'survey' about which no details have been made public. Table 1 shows the peer-reputation rankings of some colleges. Surprisingly, some newly established private institutions have, in a very short time, earned a higher reputation score than long-established institutes such as BITS Pilani, the Indian Institute of Technology (Indian School of Mines), Dhanbad, and the National Institute of Technology, Warangal.

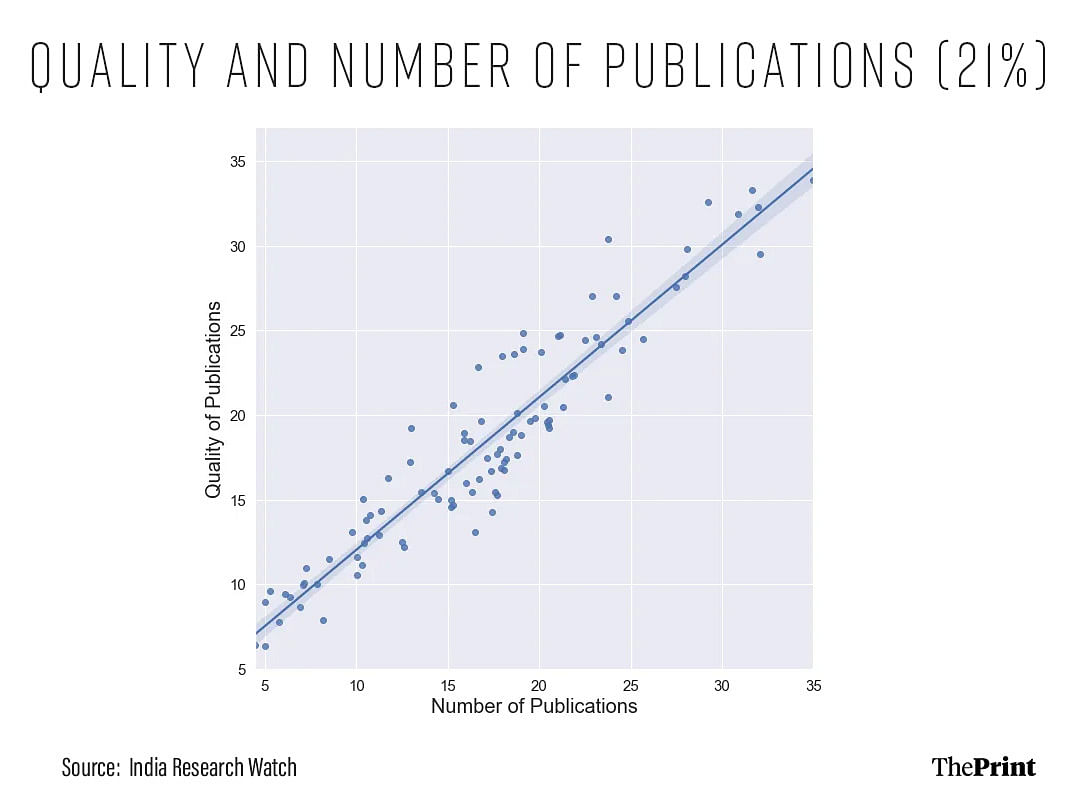

Quality and number of publications

Research is one of the most important parameters in university rankings. Fig. 2 shows that two of the most heavily weighted parameters, quality of publications and number of publications, are very strongly correlated. Besides being highly counter-intuitive, this means that the number of publications is effectively counted twice.

One explanation for this apparent paradox could be that universities are publishing more and citing their own work. Science magazine has flagged a case of an Indian university using self-citations to raise its rankings. Giving so much importance to the number of papers has also led to an explosion of research misconduct: retractions of articles from India increased 2.5-fold in 2020–2022 compared with 2017–2019.

One way to improve this parameter would be to include a penalty for retractions due to research misconduct. This would help reduce the incidence of misconduct, as universities would then have an incentive to prevent malpractice.
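One way such a penalty might work is sketched below in Python. The design choices are assumptions, not an NIRF proposal: the penalty scales with the misconduct-retraction *rate* rather than the raw count, so that larger institutions are not penalised merely for publishing more, and the score is floored at zero. The function name, the 0-100 scale and `penalty_per_point` are hypothetical.

```python
def penalised_research_score(base_score, misconduct_retractions, publications,
                             penalty_per_point=5.0):
    """Subtract a penalty proportional to the misconduct-retraction rate.

    base_score: research score before the penalty (hypothetical 0-100 scale)
    misconduct_retractions: papers retracted for research misconduct
    publications: total papers published over the same period
    penalty_per_point: marks deducted per percentage point of retraction rate
    """
    if publications <= 0:
        raise ValueError("publications must be positive")
    rate_pct = 100.0 * misconduct_retractions / publications
    # Never let the penalty push the score below zero
    return max(base_score - penalty_per_point * rate_pct, 0.0)


# Two hypothetical institutions with the same base score and output
clean = penalised_research_score(80.0, misconduct_retractions=0, publications=2000)
flagged = penalised_research_score(80.0, misconduct_retractions=40, publications=2000)
print(clean, flagged)  # 80.0 70.0
```

Here a 2 per cent misconduct-retraction rate costs 10 marks; the size of `penalty_per_point` is the policy lever that decides how strongly misconduct is discouraged.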

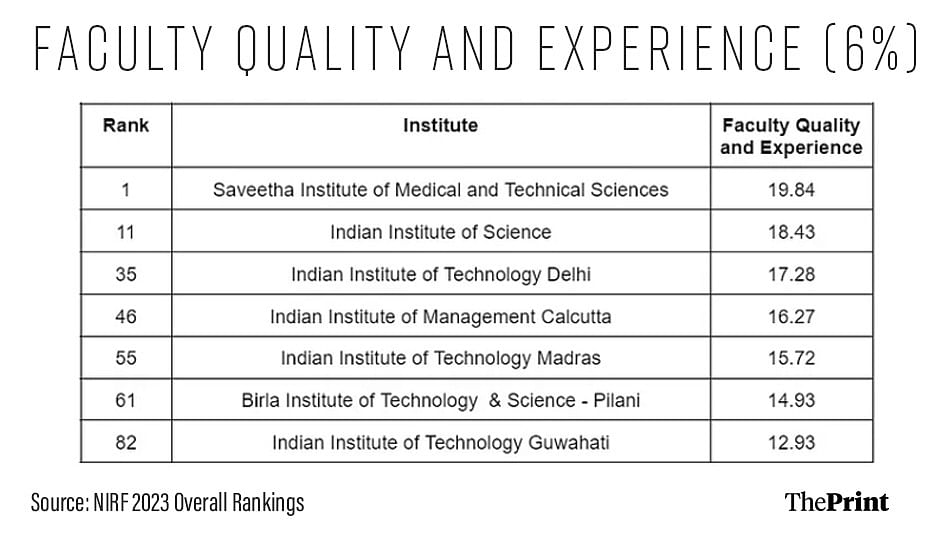

Faculty quality and experience

From the students’ perspective, this is one of the most important parameters. Yet, by NIRF’s definition, Saveetha Institute has the best faculty quality in India (Table 2). That premier institutes such as the Indian Institute of Science (11th) and IIT Madras (55th) rank so low on this list raises serious doubts about whether NIRF has defined this parameter properly.

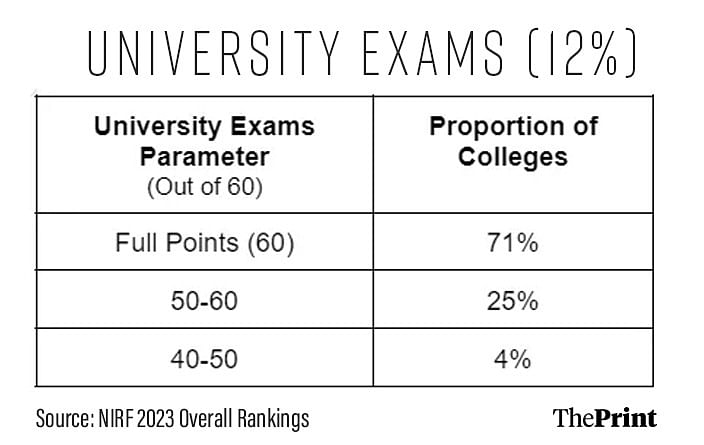

University exams

NIRF defines this parameter as the fraction of students who cleared all their exams within the stipulated time. If 80 per cent or more of the students clear all their exams, the university gets full marks for this parameter.
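The saturation is easy to see in a minimal Python sketch. Only the 80 per cent threshold comes from the definition above; the linear scaling below the threshold and the `max_marks` value are assumptions for illustration, not NIRF's published formula.

```python
def exam_score(pct_cleared, max_marks=100.0, threshold=80.0):
    """Marks for the share of students clearing all exams on time.

    Hypothetical sketch: marks rise linearly up to `threshold` per cent,
    after which the institution receives full marks.
    """
    if not 0.0 <= pct_cleared <= 100.0:
        raise ValueError("pct_cleared must be between 0 and 100")
    return max_marks * min(pct_cleared / threshold, 1.0)


for pct in (60, 72, 80, 91, 100):
    print(pct, exam_score(pct))
```

Every value from 80 to 100 yields the same full score, so under this kind of cap the parameter cannot distinguish between institutions that clear the threshold.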

Table 3 shows that 71 per cent of the top 100 colleges in the overall ranking got full marks for this parameter. This is not surprising: it would be highly unusual (and worrying) for more than 20 per cent of a college’s students not to graduate on time.

The most significant parameter of NIRF is thus also the least useful, since almost every institution gets full marks on it. It should instead be defined to measure something meaningful, such as the difficulty of university exams, for example by using the average grade point at an institute.

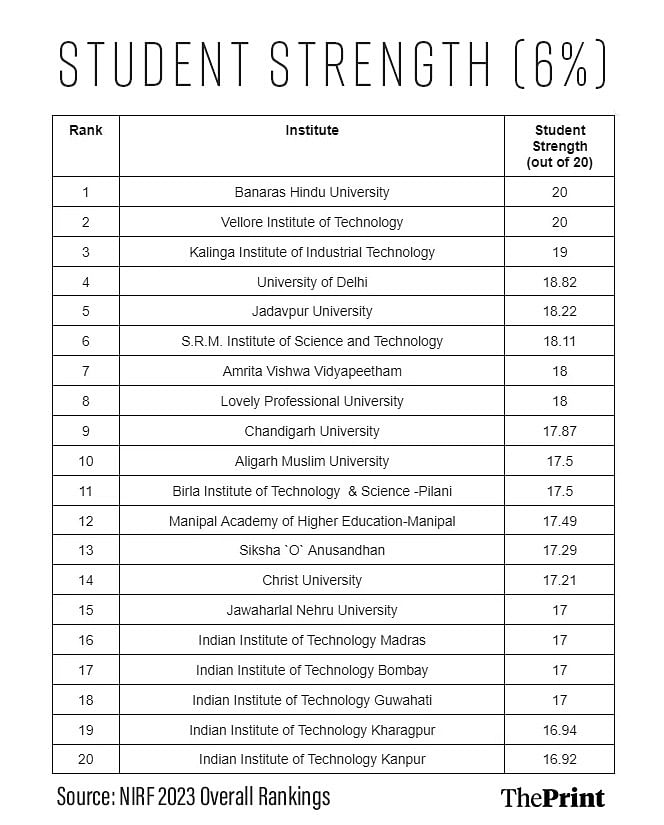

Student strength

Size (the number of students) has become an important lever for universities seeking to move up the rankings. Table 4 shows that many private universities top this parameter. This is not surprising, as private universities are expanding much faster than public ones.

We need to be wary of such unchecked expansion, as it is not always accompanied by the necessary increase in infrastructure and human resources. It also puts public universities at a disadvantage.

It would be better to measure the quality of student intake, based on scores in standardised entrance tests such as the Joint Entrance Examination (JEE).

Data correctness

Apart from the parameters being badly defined, there are also questions about the accuracy of the data that universities submit to NIRF. In a survey of 410 respondents carried out by India Research Watch (Fig. 3), the largest group (39 per cent) thought that the data submitted to NIRF could be wrong. Another 36 per cent said that the number of papers has become too important. We showed earlier why this is so: the number of publications effectively accounts for 21 per cent of the NIRF score.

Recommendations

Complete review of parameters: As shown in this article, many of the parameters are ill-defined. All of them need to be reviewed to ensure that they are not being gamed or fuelling an unhealthy race.

Data verification teams: Since the submitted data form the basis of the rankings, they must be accurate. All anomalies should be audited by a competent team to verify the submissions.

Greater transparency: All the raw data for every college (including those outside the top 100) should be released in a tabular format such as CSV. The methodology of the survey used to calculate peer reputation must also be made public. Finally, NIRF has kept secret some of the functions used to calculate composite scores; in the interest of transparency, it should disclose them.

As in the case of the National Testing Agency, we hope the government will form a competent committee and order a complete review of the NIRF rankings. Given that the futures of so many students and academics depend on them, it is important to get them as right as we can. We can do much better than the current state of affairs.

Achal Agrawal is the founder of India Research Watch. Moumita Koley is a research associate at DST-CPR, IISc. Views are personal.

(Edited by Humra Laeeq)

Dear Editor,

I am writing in response to your recent article, "NIRF parameters ill designed & lead to absurd university ratings. Govt must order a review" (dated 08/07/2024), regarding the NIRF ranking parameters, in which it was mentioned that Saveetha University has the highest faculty quality score in the country. The article implied scepticism about how a young private university could outperform older, established institutions like the IITs in Faculty Quality Evaluation (FQE) values. I would like to clarify some points and highlight the unique aspects of Saveetha University that contribute to our high rankings:

1. 100% PhD Faculty: Saveetha University is the only university in the country where every faculty member holds a PhD. There are no non-PhD holders in our teaching roster. This alone sets a high benchmark for faculty quality and academic rigour.

2. Faculty to Publication Ratio: Our faculty has an outstanding publication record, with around 10,000 publications for 900 faculty members, or roughly 11 publications per faculty member (a faculty-to-publication ratio of over 1:10). In comparison, other institutions have significantly lower ratios, the next highest being around 1:4. This demonstrates our faculty’s prolific contribution to research and academia.

3. High-Quality Publications: We pride ourselves on having the highest cumulative impact factor and the largest number of Q1 publications in the country. This challenges the notion that a higher volume of publications equates to lower quality. Our research output is not only prolific but also highly impactful.

General Observations:

The article raised concerns about the volume of publications and the associated retractions. It is important to note that, given a hundredfold increase in publications in India, a threefold increase in retractions is not alarming. A more meaningful metric would be the retraction rate (retractions as a percentage of publications) rather than the growth in the number of retractions.
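The difference between the two measures is simple arithmetic. The Python sketch below takes the letter's figures at face value (a hundredfold rise in publications, a threefold rise in retractions) purely to illustrate the calculation; the second case and the retraction counts are hypothetical, and the helper names are introduced here for illustration only.

```python
def retraction_rate(retractions, publications):
    """Retractions as a percentage of publications over the same period."""
    if publications <= 0:
        raise ValueError("publications must be positive")
    return 100.0 * retractions / publications


def rate_change(pub_growth, retraction_growth):
    """Factor by which the retraction rate changes when publications grow
    `pub_growth`-fold and retractions grow `retraction_growth`-fold."""
    return retraction_growth / pub_growth


# Letter's figures, taken at face value: the rate falls to 3% of its earlier level
print(rate_change(pub_growth=100, retraction_growth=3))  # 0.03
# Hypothetical: if publications had only doubled, a threefold rise in
# retractions would push the rate up by half
print(rate_change(pub_growth=2, retraction_growth=3))  # 1.5

# Hypothetical counts: 30 retractions out of 10,000 papers
print(retraction_rate(30, 10_000))  # 0.3
```

The conclusion therefore depends entirely on the two growth factors being measured over the same period and population.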

While we agree that greater transparency is needed in certain metrics, such as perception, we stand by the robustness of our faculty metrics. In fact, we believe that our scores could be even higher, and that the current NIRF evaluation may not fully reflect our achievements.

Articles like yours should consider these aspects to avoid unfairly diminishing the hard work of our faculty and students. Accurate representation of metrics and achievements is crucial to ensuring that institutions like Saveetha University receive the recognition they deserve.

Thank you for considering our perspective.