New Delhi: Artificial intelligence chatbot ChatGPT has shot to popularity since its launch last November, with millions of users — including cybercriminals. They’re using the tool to quickly create more realistic and convincing messages in different languages, according to cyber safety brand Norton.

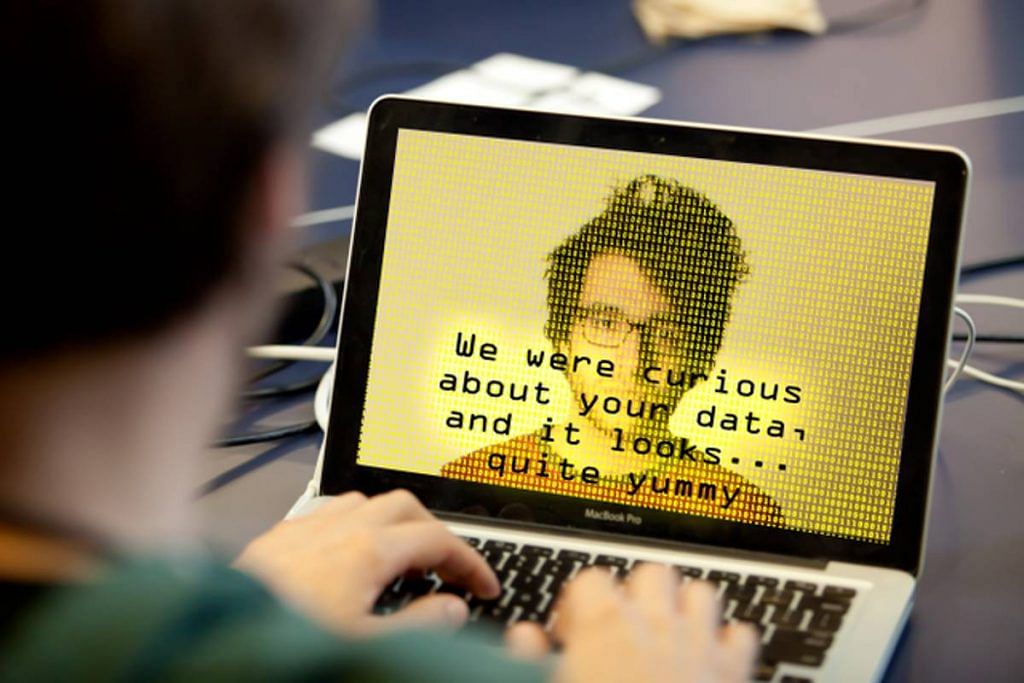

“…Cybercriminals are using it (ChatGPT) to generate malicious threats through its impressive ability to generate human-like text that adapts to different languages and audiences. Cybercriminals can now quickly and easily craft email or social media phishing lures that are even more convincing, making it more difficult to tell what’s legitimate and what’s a threat,” says Norton, which is part of Nasdaq-listed software firm Gen Digital.

In its quarterly Consumer Cyber Safety Pulse Report released Tuesday, Norton said that in addition to writing lures — the bait used to hook victims in a phishing scam — ChatGPT can also generate code. Just as ChatGPT, developed by US research lab OpenAI, makes developers’ lives easier with its ability to write and translate source code, it can also make cybercriminals’ lives easier by making scams faster to create and more difficult to detect.

“I’m excited about large language models like ChatGPT, however, I’m also wary of how cybercriminals can abuse it. We know cybercriminals adapt quickly to the latest technology, and we’re seeing that ChatGPT can be used to quickly and easily create convincing threats,” Kevin Roundy, senior technical director of Norton, said in a statement Tuesday.

Besides using ChatGPT for more efficient phishing scams, Norton experts believe it can also be used to create deepfake chatbots. These can impersonate humans or legitimate sources, like a bank or a government entity, to manipulate victims into handing over personal information, which criminals can then use to access sensitive data, steal money or commit fraud, Norton explained.

To keep themselves safe, Norton advised internet users to avoid chatbots that do not appear on a company’s website or app, and to be cautious about sharing any personal information when chatting with someone online. It also asked users to think before clicking on links in unsolicited phone calls, emails or messages, and to keep their security solutions updated.

According to the report, over the course of 2022, Norton thwarted more than 3.5 billion threats globally, or around 9.6 million per day. Of these, 90.9 million were phishing attempts, 260.4 million were file threats and 1.6 million were mobile threats. Further, Norton AntiTrack blocked over 3 billion trackers and fingerprinting scripts.

Fingerprinting refers to a type of online tracking that is more invasive than the usual cookie-based tracking.

In India, from October through December 2022, Norton blocked close to 96,000 phishing attempts and 1.6 million file threats. In all, it blocked over 13 million threats in the country during the quarter, or around 143,400 per day.

(Edited by Geethalakshmi Ramanathan)
